Importance of data collection

Data collection is the process of gathering and measuring information on variables of interest, in an established systematic fashion that enables one to answer stated research questions, test hypotheses, and evaluate outcomes. The data collection component of research is common to all fields of study, including the physical and social sciences, humanities, and business. While methods vary by discipline, the emphasis on ensuring accurate and honest collection remains the same.

The importance of ensuring accurate and appropriate data collection

Regardless of the field of study or preference for defining data (quantitative, qualitative), accurate data collection is essential to maintaining the integrity of research. Both the selection of appropriate data collection instruments (existing, modified, or newly developed) and clearly delineated instructions for their correct use reduce the likelihood of errors occurring.

Consequences from improperly collected data include:

- inability to answer research questions accurately
- inability to repeat and validate the study
- distorted findings resulting in wasted resources
- misleading other researchers to pursue fruitless avenues of investigation
- compromising decisions for public policy
- causing harm to human participants and animal subjects

While the degree of impact from faulty data collection may vary by discipline and the nature of investigation, there is the potential to cause disproportionate harm when these research results are used to support public policy recommendations.

Issues related to maintaining integrity of data collection

The primary rationale for preserving data integrity is to support the detection of errors in the data collection process, whether they are made intentionally (deliberate falsifications) or not (systematic or random errors). Craddick, Crawford, Redican, Rhodes, Rukenbrod, and Laws (2003) describe ‘quality assurance’ and ‘quality control’ as two approaches that can preserve data integrity and ensure the scientific validity of study results.
Each approach is implemented at different points in the research timeline (Whitney, Lind, Wahl, 1998):

- Quality assurance: activities that take place before data collection begins
- Quality control: activities that take place during and after data collection

Quality assurance

Since quality assurance precedes data collection, its main focus is prevention. This proactive measure is best demonstrated by the standardization of protocol developed in a comprehensive and detailed procedures manual for data collection. When the manual is poorly written, the resulting failures may be demonstrated in a number of ways:

- uncertainty about the timing, methods, and identity of the person(s) responsible for reviewing data
- partial listing of items to be collected
- vague description of data collection instruments to be used in lieu of rigorous step-by-step instructions on administering tests
- failure to identify specific content and strategies for training or retraining staff members responsible for data collection
- obscure instructions for using, making adjustments to, and calibrating data collection equipment (if appropriate)

Implicit in training is the need to effectively communicate the value of accurate data collection to trainees (Knatterud, Rockhold, George, Barton, Davis, Fairweather, Honohan, Mowery, O'Neill, 1998).

Since the researcher is often the main measurement device in a study, many times there are few or no other data collection instruments.

Indeed, instruments may need to be developed on the spot to accommodate the unanticipated.

Quality control

While quality control activities (detection/monitoring and action) occur during and after data collection, the details should be carefully documented in the procedures manual. There should not be any uncertainty about the flow of information between principal investigators and staff members following the detection of errors in data collection. A poorly developed communication structure encourages lax monitoring and limits opportunities for detecting errors.

Detection or monitoring can take the form of direct staff observation during site visits, conference calls, or regular and frequent reviews of data reports to identify inconsistencies, extreme values or invalid codes. While site visits may not be appropriate for all disciplines, failure to regularly audit records, whether quantitative or qualitative, will make it difficult for investigators to verify that data collection is proceeding according to procedures established in the manual. In addition, if the structure of communication is not clearly delineated in the procedures manual, transmission of any change in procedures to staff members can be compromised.

Quality control also identifies the required responses, or ‘actions’, necessary to correct faulty data collection practices and minimize future occurrences. These actions are less likely to occur if data collection procedures are vaguely written and the necessary steps to minimize recurrence are not implemented through feedback and education (Knatterud et al., 1998).

Examples of data collection problems that require prompt action include:

- errors in individual data items
- violation of protocol
- problems with individual staff or site performance
- fraud or scientific misconduct

In the social/behavioral sciences, where primary data collection involves human subjects, researchers are taught to incorporate one or more secondary measures that can be used to verify the quality of information being collected from the human subject.
For example, a researcher conducting a survey might be interested in gaining better insight into the occurrence of risky behaviors among young adults, as well as the social conditions that increase the likelihood and frequency of these risky behaviors. To verify data quality, respondents might be queried about the same information at different points of the survey and in a number of different ways. There are two points that need to be raised here: 1) cross-checks within the data collection process, and 2) data quality being as much an observation-level issue as it is a complete data set issue. Thus, data quality should be addressed for each individual measurement, for each individual observation, and for the entire data set.

Each field of study has its preferred set of data collection instruments.
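The observation-level checks described above (invalid codes, extreme values, and repeated questions that should agree) can be sketched in a few lines of Python. This is only an illustration: the field names, the codebook, the age bounds, and the sample records are all hypothetical, and a real study would take them from its own procedures manual.

```python
# Hypothetical codebook, for illustration only.
VALID_SEX_CODES = {1, 2, 9}   # 9 stands for "not answered" in this made-up scheme
AGE_RANGE = (18, 29)          # assumed bounds for a "young adult" sample


def check_record(record):
    """Return a list of quality-control flags for one survey record."""
    flags = []

    # Invalid code: the recorded value does not appear in the codebook.
    if record["sex"] not in VALID_SEX_CODES:
        flags.append("invalid code: sex")

    # Extreme value: the recorded value falls outside the plausible range.
    low, high = AGE_RANGE
    if not low <= record["age"] <= high:
        flags.append("extreme value: age")

    # Cross-check: the same information was asked at two points of the survey.
    if record["drinks_per_week_q5"] != record["drinks_per_week_q31"]:
        flags.append("inconsistent: drinks_per_week")

    return flags


def check_dataset(records):
    """Map record id -> flags, keeping only records with at least one flag."""
    report = {}
    for record in records:
        flags = check_record(record)
        if flags:
            report[record["id"]] = flags
    return report


# Made-up records: one clean, one with a bad code, one with two problems.
SAMPLE_RECORDS = [
    {"id": 1, "sex": 1, "age": 22, "drinks_per_week_q5": 3, "drinks_per_week_q31": 3},
    {"id": 2, "sex": 7, "age": 24, "drinks_per_week_q5": 0, "drinks_per_week_q31": 0},
    {"id": 3, "sex": 2, "age": 210, "drinks_per_week_q5": 2, "drinks_per_week_q31": 10},
]

if __name__ == "__main__":
    for record_id, flags in check_dataset(SAMPLE_RECORDS).items():
        print(record_id, flags)
```

The per-record function covers the individual observation, while the dataset-level report is the kind of summary that could feed a regular review of data reports. Requiring the two repeated answers to match exactly is a simplification; a real survey might allow some tolerance.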

Regardless of the discipline, comprehensive documentation of the collection process before, during and after the activity is essential to preserving data integrity.

Reference: Whitney, Lind, and Wahl (1998). Epidemiologic Reviews, 20(1).

Data is a collection of facts (numbers, words, measurements, observations, etc.) that has been translated into a form that computers can process.

Whatever industry you work in, or whatever your interests, you will almost certainly have come across a story about how “data” is changing the face of our world. It might be helping to cure a disease, boost a company’s revenue, make a building more efficient or be responsible for those targeted ads you keep seeing.

In general, data is simply another word for information. But in computing and business (most of what you read about in the news when it comes to data, especially if it’s about big data), data refers to information that is machine-readable as opposed to human-readable.

Human-readable (also known as unstructured data) refers to information that only humans can interpret, such as an image or the meaning of a block of text. If it requires a person to interpret it, that information is human-readable.

Machine-readable (or structured data) refers to information that computer programs can process. In order for a program to perform instructions on data, that data must have some kind of uniform structure.

For example, US naval officer Matthew Maury turned years of old hand-written shipping logs (human-readable) into a large collection of coordinate routes (machine-readable).

When it comes to the types of structured data that are in Forbes articles and McKinsey reports, there are a few different types which tend to get the most attention.
Lots of different companies collect your personal data (especially social media sites). Anytime you have to put in your email address or credit card details, you are giving away your personal data. Often they’ll use that data to provide you with personalized suggestions to keep you engaged.

Facebook, for example, uses your personal information to suggest content you might like to see based on what other people similar to you like. In addition, personal data is aggregated (to depersonalize it somewhat) and then sold to other companies, mostly for advertising purposes. That’s one of the ways you get targeted ads and content from companies you’ve never even heard of.

Transactional data is anything that requires an action to collect. You might click on an ad, make a purchase, or visit a certain web page. Pretty much every website you visit collects transactional data of some kind, either through Google Analytics, another third-party system or their own internal data capture system.

Transactional data is incredibly important for businesses because it helps them to expose variability and optimize their operations. By examining large amounts of data, it is possible to uncover hidden patterns and correlations. These patterns can create competitive advantages and result in business benefits like more effective marketing and increased revenue.

Web data is a collective term which refers to any type of data you might pull from the internet. That might be data on what your competitors are selling, published government data, football scores, etc. It’s a catchall for anything you can find on the web that is public facing (i.e. not stored in some internal database).

Web data is important because it’s one of the major ways businesses can access information that isn’t generated by themselves. When creating business models and making important BI decisions, businesses need information on what is happening internally and externally within their organization and what is happening in the wider market.

Web data can be used to monitor competitors, track potential customers, keep track of channel partners, generate leads, build apps, and much more.
Its uses are still being discovered as the technology for turning unstructured data into structured data improves.

Web data can be collected by writing web scrapers to collect it, using a scraping tool, or by paying a third party to do the scraping for you.
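To make the first of those options concrete, here is a minimal sketch of the parsing half of a scraper, using only Python's standard library. It assumes the page contains a simple HTML table whose first row is a header; the class name, function name, and sample HTML are hypothetical. To keep the example runnable offline it parses a hard-coded string, whereas a real scraper would first download the page from a URL.

```python
import json
from html.parser import HTMLParser


class TableScraper(HTMLParser):
    """Collect the text of every table cell, row by row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # completed rows, each a list of cell strings
        self._row = None    # row being built, or None when outside a <tr>
        self._cell = None   # text pieces of the current cell, or None outside a cell

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that appears inside a table cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None  # discard any cell left open by malformed markup


def scrape_table(html):
    """Turn the first-row-as-header table in `html` into a list of dicts."""
    parser = TableScraper()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


# Made-up page content standing in for a downloaded web page.
SAMPLE_HTML = """
<table>
  <tr><th>team</th><th>score</th></tr>
  <tr><td>Home</td><td>2</td></tr>
  <tr><td>Away</td><td>1</td></tr>
</table>
"""

if __name__ == "__main__":
    # Structured output: one JSON object per table row.
    print(json.dumps(scrape_table(SAMPLE_HTML), indent=2))
```

Every value comes out as a string, so a later step would still need to convert scores to numbers. That conversion is part of what turning unstructured data into structured data involves.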

A web scraper is a computer program that takes a URL as an input and pulls the data out in a structured format, such as a JSON feed.

Sensor data is produced by objects and is often referred to as the Internet of Things. It covers everything from your smartwatch measuring your heart rate to a building with external sensors that measure the weather.

So far, sensor data has mostly been used to help optimize processes. By measuring what is happening around them, machines can make smart changes to increase productivity and alert people when they are in need of maintenance.

When does data become big data? The term simply represents the increasing amount and the varied types of data that is now being collected. As more and more of the world’s information moves online and becomes digitized, analysts can start to use it as data. Things like social media, online books, music, videos and the increased number of sensors have all added to the astounding increase in the amount of data that has become available for analysis.

The thing that differentiates big data from the “regular data” we were analyzing before is that the tools we use to collect, store and analyze it have had to change to accommodate the increase in size and complexity. With these tools, we can process datasets in their entirety and gain a far more complete picture of the world around us.

The sexiest job of the 21st century? That data needs to be processed and interpreted by someone before it can be used for insights. No matter what kind of data you’re talking about, that someone is usually a data scientist. Data scientists are now one of the most sought-after positions. To become a data scientist you need a solid foundation in computer science, modeling, statistics, analytics and math.
What sets them apart from traditional job titles is an understanding of business processes and an ability to communicate findings to both business and IT leaders in a way that can influence how an organization approaches a business challenge.

If you’re interested in learning more about data or want to start taking advantage of all it has to offer, check out the blogs, people, events, courses and tools below.

Blogs:

- FlowingData: run by Dr. Nathan Yau, PhD, it has tutorials, visualizations, resources, book recommendations and humorous discussions on challenges faced by the industry.
- FiveThirtyEight: run by data-wiz Nate Silver, it offers data analysis on popular news topics including politics, culture and sports.
- Edwin Chen: the self-named blog from the head data scientist at Dropbox offers hands-on tips for using algorithms.
- Data Science Weekly: for the latest news in data science, this is the ultimate email newsletter.
- No Free Hunch (Kaggle): Kaggle hosts a number of predictive modeling competitions. Its competition and data science blog covers all things related to the sport of data science.
- Smart Data Collective: an online community moderated by Social Media Today that provides information on the latest trends in business intelligence and data.
- KDnuggets: a comprehensive resource for anyone with a vested interest in the data science community.
- Data Elixir: a great roundup of data news across the web. You can get a weekly digest sent straight to your inbox.

People to follow:

- Marcus Borba (CTO, Spark): his feed is stacked with visualizations of complex concepts like the Internet of Things (IoT).
- Lillian Pierson (author, Data Science for Dummies): she links to a bevy of informative articles, from news clips on the latest companies taking advantage of big data to helpful blog posts from influencers in both data science and business.
- Kirk Borne (Principal Data Scientist at BoozAllen): posts and retweets links to fascinating articles on big data and data science.
- Data Mavericks Under 40: this list encompasses the who’s who of the bright and innovative in data.

Events:

- Strata + Hadoop World, New York, NY (Sept. 1): focuses specifically on big data’s implications for big business.
- San Francisco, CA (October 30): brings together more than 600 of the best minds in data science to combine growth hacking with data analysis.
- Big Data TechCon 2015, Chicago, IL (November 2-4): a major “how to” for big data use that will prove to be very instructive in how new businesses take on big data.
- Big Data Bootcamp, Tampa, FL (December 7-9): an intensive, beginner-friendly, hands-on training experience that immerses you in the world of big data.
- Big Data Innovation Summit, Las Vegas, NV (January 21-22): hear from the likes of Hershey, Netflix, and the Department of Homeland Security on exactly how you can make your data actionable.
- Data Summit 2016, New York, NY (May 9-11): brings together government agencies, public institutions, and leading businesses to harness new technologies and strategies for further incorporating data into your day-to-day work.

Courses:

- Free and paid-for online courses to teach you everything you need to know.
- Code School: learn coding online by following simple step-by-step tutorials.
- An essential introduction to code that unlocks the immense potential of the digital world.
- DataCamp: build a solid foundation in data science, and strengthen your R programming skills.
- Coursera: partners with top universities and organizations to offer courses online.
- Online tutorials for learning basic coding and data analysis.

Tools:

- OpenRefine: data cleaning software that allows you to pre-process your data for analysis.
- WolframAlpha: provides detailed responses to technical searches and does very complex calculations. For business users, it presents information charts and graphs, and is excellent for high-level pricing history, commodity information, and topic overviews.
- A tool that allows you to turn the unstructured data displayed on web pages into structured tables of data that can be accessed over an API.
- A tool to clean and wrangle data from files and databases you could not handle in Excel, with easy-to-use statistical tools.
- Tableau: a visualization tool that makes it easy to look at your data in new ways.
- Google Fusion Tables: a versatile tool for data analysis and large data set visualization.
- Blockspring: get live data, create interactive maps, get street view images, run image recognition, and save to Dropbox from Google Sheets.
- A free place to create, publish and share data.
- A tool to visualize your data in an easy way to quickly see trends.
- A tool to identify the relationships between keywords and concepts within your data set and glean insight about products.
- A tool to build a model of your market, with all the variables like pricing and product features.

Data collection is the process of gathering and measuring information on targeted variables in an established systematic fashion, which then enables one to answer relevant questions and evaluate outcomes.

Example of data collection in the biological sciences: Adélie penguins are identified and weighed each time they cross the automated weighbridge on their way to or from the sea.

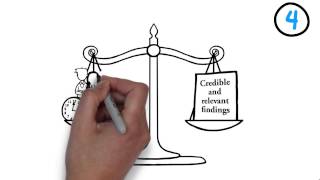

Data collection is a component of research in all fields of study including physical and social sciences, humanities, and business. The goal for all data collection is to capture quality evidence that allows analysis to lead to the formulation of convincing and credible answers to the questions that have been posed.

A formal data collection process is necessary as it ensures that the data gathered are both defined and accurate and that subsequent decisions based on arguments embodied in the findings are valid. The process provides both a baseline from which to measure and, in certain cases, an indication of what to improve.
In this chapter, data collection is carried out to meet the aim and objectives of the research. Data collection is an important aspect of any type of research study.

In other words, this chapter reviews the methods applied in data collection to determine the level of awareness among all site workers of hazardous work and of safety and health training. Interviews and questionnaires are classified as primary sources, since these two methods can obtain information and give a real picture of the situation. There are three methods that can be carried out to collect data. The last part of this chapter determines the method of analysis selected to evaluate and analyze the data.

1 Data collection

The method to be used in collecting data is the qualitative data collection method, because it is more suitable for this research.

An interview can be defined as a conversation between two or more people in which the interviewer asks questions to obtain information from the interviewee. The researcher can gain more detailed and accurate data by probing deeply during the interview. On the other hand, the quality of the data depends on the ability of the interviewer: it may be affected by the interviewer's experience and communication skills. The interaction between interviewer and interviewee may vary from one interview to another, and as a result the quality of the data collected may drop dramatically and lack credibility. In this case, given the experience and knowledge of the interviewees, the data collected from them will be more credible and accurate.

6 Method of analysis

In this element, the analysis will be based on the data collected from the interviewees. With this method, the data collected from interviewees will be more accurate than with other methods.

7 Potential problem and contingency

Quality of data

A problem that might be encountered during the interviews is the quality of the information and data collected from the different interviewees. All interviewees come from different backgrounds and have different experience and opinions on safety and health issues, so the data obtained from them may vary.