Meta analysis research paper

Systematic reviews and meta-analyses: a step-by-step guide

If you are considering doing a systematic review or meta-analysis, this step-by-step guide aims to support you along the way. There is a 'wiki' section for you, and others who have been through the process, to add useful hints and tips, and up-to-date resources particularly relevant to researchers and students.

What is a systematic review or meta-analysis? A systematic review answers a defined research question by collecting and summarising all empirical evidence that fits pre-specified eligibility criteria. Meta-analysis is the use of statistical methods to summarise the results of these studies.

Systematic reviews, just like other research articles, can be of varying quality. They are a significant piece of work (the Centre for Reviews and Dissemination at York estimates that a team will take 9-24 months), and to be useful to other researchers and practitioners they should have clearly stated objectives, pre-defined eligibility criteria, and a reproducible methodology.

If you have any useful resources that would be beneficial for this guide, please let us know (contact Beverly Roberts) and she'll add them.

The guide is organised into five modules: Module 1: introduction to systematic reviews; Module 2: systematic literature searching; Module 3: data extraction, quality assessment and narrative synthesis; Module 4: systematic review hints and tips; Module 5: introduction to meta-analysis.
Meta-analysis is a statistical analysis that combines the results of multiple scientific studies. The basic tenet behind meta-analyses is that there is a common truth behind all conceptually similar scientific studies, but which has been measured with a certain error within individual studies. In addition to providing an estimate of the unknown common truth, meta-analysis has the capacity to contrast results from different studies and identify patterns among study results, sources of disagreement among those results, or other interesting relationships that may come to light in the context of multiple studies. However, in performing a meta-analysis, an investigator must make choices which can affect the results, including deciding how to search for studies, selecting studies based on a set of objective criteria, dealing with incomplete data, analyzing the data, and accounting for or choosing not to account for publication bias.

For instance, a meta-analysis may be conducted on several clinical trials of a medical treatment, in an effort to obtain a better understanding of how well the treatment works. Here it is convenient to follow the terminology used by the Cochrane Collaboration,[3] and use "meta-analysis" to refer to statistical methods of combining evidence, leaving other aspects of 'research synthesis' or 'evidence synthesis', such as combining information from qualitative studies, for the more general context of systematic reviews.

The historical roots of meta-analysis can be traced back to 17th century studies of astronomy,[4] while a paper published in 1904 by the statistician Karl Pearson in the British Medical Journal,[5] which collated data from several studies of typhoid inoculation, is seen as the first time a meta-analytic approach was used to aggregate the outcomes of multiple clinical studies.[6][7] The first meta-analysis of all conceptually identical experiments concerning a particular research issue, and conducted by independent researchers, has been identified as the 1940 book-length publication Extrasensory Perception After Sixty Years, authored by Duke University psychologists.[8] This encompassed a review of 145 reports on ESP experiments published from 1882 to 1939, and included an estimate of the influence of unpublished papers on the overall effect (the file-drawer problem). Although meta-analysis is widely used in epidemiology and evidence-based medicine today, a meta-analysis of a medical treatment was not published until 1955. In the 1970s, more sophisticated analytical techniques were introduced in educational research, starting with the work of Gene V. Glass,[9] who was the first modern statistician to formalize the use of the term meta-analysis. Although this led to him being widely recognized as the modern founder of the method, the methodology behind what he termed "meta-analysis" predates his work by several decades.

Conceptually, a meta-analysis uses a statistical approach to combine the results from multiple studies in an effort to increase power (over individual studies), improve estimates of the size of the effect and/or to resolve uncertainty when reports disagree.

A meta-analysis is a statistical overview of the results from one or more systematic reviews. A meta-analysis of several small studies does not predict the results of a single large study.[12] Some have argued that a weakness of the method is that sources of bias are not controlled by the method: a good meta-analysis cannot correct for poor design and/or bias in the original studies.[13] This would mean that only methodologically sound studies should be included in a meta-analysis, a practice called 'best evidence synthesis'.[13] Other meta-analysts would include weaker studies, and add a study-level predictor variable that reflects the methodological quality of the studies to examine the effect of study quality on the effect size.

Publication bias is a further concern. For example, pharmaceutical companies have been known to hide negative studies, and researchers may have overlooked unpublished studies such as dissertation studies or conference abstracts that did not reach publication. However, small study effects may be just as problematic for the interpretation of meta-analyses, and the onus is on meta-analytic authors to investigate potential sources of bias. One check is to calculate how many additional studies would be needed to reduce the pooled result to non-significance. If this number of studies is larger than the number of studies used in the meta-analysis, it is taken as a sign that publication bias is unlikely, as in that case one needs a lot of studies to reduce the effect size. Another option is the trim-and-fill method, which imputes data if the funnel plot is asymmetric.

The problem of publication bias is not trivial, as it is suggested that 25% of meta-analyses in the psychological sciences may have suffered from publication bias. However, questionable research practices, such as reworking statistical models until significance is achieved, may also favor statistically significant findings in support of researchers' hypotheses.
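The count of additional studies described above is often called a fail-safe N. The sketch below uses one common formulation, usually attributed to Rosenthal, and is an illustration rather than the method of any study cited here. It assumes each study is summarised by a one-tailed z-score, that the unseen studies average z = 0, and that 1.645 is the one-tailed critical value; the function name `fail_safe_n` is hypothetical.

```python
def fail_safe_n(z_scores, z_alpha=1.645):
    """Estimate how many unseen null studies would erase significance.

    Assumption: studies are combined with Stouffer's method, so the
    combined z is sum(z) / sqrt(k + n) once n studies with z = 0 are
    added. Solving for the n at which it falls to z_alpha gives
    n = (sum(z) / z_alpha) ** 2 - k.
    """
    k = len(z_scores)
    if k == 0:
        raise ValueError("need at least one study")
    total_z = sum(z_scores)
    if total_z <= 0:
        # The combined result is not in the hypothesised direction,
        # so there is no significant effect to overturn.
        return 0.0
    n = (total_z / z_alpha) ** 2 - k
    return max(0.0, n)


# Hypothetical z-scores for four studies (illustrative values only).
observed = [2.0, 2.0, 2.0, 2.0]
needed = fail_safe_n(observed)
print(f"studies in analysis: {len(observed)}, fail-safe N: {needed:.1f}")
```

A result well above the number of included studies is read, under these assumptions, as some reassurance. It does not detect bias by itself and says nothing about small study effects.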

Other weaknesses are that it has not been determined whether the statistically most accurate method for combining results is the fixed, IVhet, random or quality effect model, though criticism of the random effects model is mounting because of the perception that the new random effects (used in meta-analysis) are essentially formal devices to facilitate smoothing or shrinkage, and prediction may be impossible or ill-advised. There is no reason to think the analysis model and data-generation mechanism (model) are similar in form, but many sub-fields of statistics have developed the habit of assuming, for theory and simulations, that the data-generation mechanism (model) is identical to the analysis model we choose (or would like others to choose). As a hypothesized mechanism for producing the data, the random effect model for meta-analysis is silly, and it is more appropriate to think of this model as a superficial description and something we choose as an analytical tool. This choice may not work for meta-analysis, however, because the study effects are a fixed feature of the respective meta-analysis and the probability distribution is only a descriptive tool.

The most severe fault in meta-analysis[25] often occurs when the person or persons doing the meta-analysis have an economic, social, or political agenda such as the passage or defeat of legislation. People with these types of agendas may be more likely to abuse meta-analysis due to personal bias. For example, researchers favorable to the author's agenda are likely to have their studies cherry-picked while those not favorable will be ignored or labeled as "not credible". The influence of such biases on the results of a meta-analysis is possible because the methodology of meta-analysis is highly malleable.

A 2011 study done to disclose possible conflicts of interest in underlying research studies used for medical meta-analyses reviewed 29 meta-analyses and found that conflicts of interest in the studies underlying the meta-analyses were rarely disclosed. The 29 meta-analyses included 11 from general medicine journals, 15 from specialty medicine journals, and three from the Cochrane Database of Systematic Reviews. The authors concluded "without acknowledgment of COI due to industry funding or author industry financial ties from RCTs included in meta-analyses, readers' understanding and appraisal of the evidence from the meta-analysis may be compromised."

For example, in 1998, a US federal judge found that the United States Environmental Protection Agency had abused the meta-analysis process to produce a study claiming cancer risks to non-smokers from environmental tobacco smoke (ETS) with the intent to influence policy makers to pass smoke-free-workplace laws. The judge found that: Without criteria for pooling studies into a meta-analysis, the court cannot determine whether the exclusion of studies likely to disprove EPA's a priori hypothesis was coincidence or intentional. The Act states EPA's program shall "gather data and information on all aspects of indoor air quality" (Radon Research Act § 403(a)(1)) (emphasis added).

A meta-analysis is usually preceded by a systematic review, as this allows the reviewer to identify and critically appraise all the relevant evidence (thereby limiting the risk of bias in summary estimates). Guidance for the conduct and reporting of meta-analyses is provided by the Cochrane Collaboration; for reporting guidelines, see the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement.

In general, two types of evidence can be distinguished when performing a meta-analysis: individual participant data (IPD), and aggregate data (AD). Aggregate data can be direct or indirect. Direct aggregate data are summary estimates, such as odds ratios or relative risks, reported by studies that compared the treatments of interest head to head. On the other hand, indirect aggregate data measures the effect of two treatments that were each compared against a similar control group in a meta-analysis. For example, if treatment A and treatment B were directly compared vs placebo in separate meta-analyses, we can use these two pooled results to get an estimate of the effects of A vs B in an indirect comparison as effect A vs placebo minus effect B vs placebo. IPD evidence represents raw data as collected by the study centers. This distinction has raised the need for different meta-analytic methods when evidence synthesis is desired, and has led to the development of one-stage and two-stage methods.

By reducing IPD to AD, two-stage methods can also be applied when IPD is available; this makes them an appealing choice when performing a meta-analysis.
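The indirect comparison described above (effect A vs placebo minus effect B vs placebo) can be sketched as follows. The subtraction comes from the text. The standard error assumes the two pooled estimates are independent, so their variances add, and that the effects are on an additive scale such as log odds ratios. The function name `indirect_comparison` and the input values are hypothetical.

```python
import math


def indirect_comparison(effect_a, se_a, effect_b, se_b, z=1.96):
    """Indirect estimate of A vs B from two placebo-controlled results.

    effect_a, effect_b: pooled effects vs placebo on an additive scale
                        (for example log odds ratios).
    se_a, se_b:         their standard errors.
    Assumption: the two pooled estimates are independent, so the
    variance of the difference is the sum of the two variances.
    """
    difference = effect_a - effect_b
    se_difference = math.sqrt(se_a ** 2 + se_b ** 2)
    interval = (difference - z * se_difference,
                difference + z * se_difference)
    return difference, se_difference, interval


# Illustrative log odds ratios vs placebo (made-up numbers).
diff, se, (low, high) = indirect_comparison(-0.5, 0.3, -0.2, 0.4)
print(f"A vs B: {diff:.2f} (SE {se:.2f}), 95% CI {low:.2f} to {high:.2f}")
```

Because the variances add, the indirect estimate is always less precise than either of the two pooled results it is built from.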

Under the fixed effect model, each study is weighted by the inverse of its variance, so larger studies contribute more to the weighted average. Consequently, when studies within a meta-analysis are dominated by a very large study, the findings from smaller studies are practically ignored. The fixed effect model also assumes that all included studies estimate the same underlying effect. This assumption is typically unrealistic as research is often prone to several sources of heterogeneity.

The weight that is applied in this process of weighted averaging with a random effects meta-analysis is achieved in two steps:[34]

1: inverse variance weighting
2: un-weighting of this inverse variance weighting by applying a random effects variance component (REVC) that is simply derived from the extent of variability of the effect sizes of the underlying studies.

This means that the greater this variability in effect sizes (otherwise known as heterogeneity), the greater the un-weighting, and this can reach a point when the random effects meta-analysis result becomes simply the un-weighted average effect size across the studies. At the other extreme, when all effect sizes are similar (or variability does not exceed sampling error), no REVC is applied and the random effects meta-analysis defaults to simply a fixed effect meta-analysis (only inverse variance weighting).[44]

Several advanced iterative (and computationally expensive) techniques for computing the between studies variance exist (such as maximum likelihood, profile likelihood and restricted maximum likelihood methods), and random effects models using these methods can be run in Stata with the metaan command.[45] The metaan command must be distinguished from the classic metan (single "a") command in Stata that uses the DL estimator. These advanced methods have also been implemented in a free and easy to use Microsoft Excel add-on, MetaEasy. Most meta-analyses include between 2 and 4 studies, and such a sample is more often than not inadequate to accurately estimate heterogeneity. Thus it appears that in small meta-analyses, an incorrect zero between study variance estimate is obtained, leading to a false homogeneity assumption.
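The two weighting steps can be illustrated with a short sketch. It assumes the REVC is estimated with the DerSimonian-Laird (DL) moment estimator, which is one choice among the estimators mentioned above, and it is not the code of any package named in the text. The function names and the input numbers are hypothetical.

```python
def inverse_variance_pool(effects, variances):
    """Step 1: weight each study by 1 / variance (fixed effect pooling)."""
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total
    return pooled, 1.0 / total  # pooled effect and its variance


def dl_between_study_variance(effects, variances):
    """REVC via the DerSimonian-Laird moment estimator (assumed here)."""
    k = len(effects)
    if k < 2:
        return 0.0
    weights = [1.0 / v for v in variances]
    pooled, _ = inverse_variance_pool(effects, variances)
    # Cochran's Q: weighted squared deviations from the fixed effect mean.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    c = sum(weights) - sum(w * w for w in weights) / sum(weights)
    # Truncated at zero when variability does not exceed sampling error.
    return max(0.0, (q - (k - 1)) / c)


def random_effects_pool(effects, variances):
    """Step 2: add the REVC to every variance, then re-weight."""
    tau2 = dl_between_study_variance(effects, variances)
    pooled, variance = inverse_variance_pool(
        effects, [v + tau2 for v in variances])
    return pooled, variance, tau2


# Made-up effect sizes and within-study variances for three studies.
effects = [0.1, 0.5, 0.9]
variances = [0.01, 0.01, 0.01]
print(inverse_variance_pool(effects, variances))
print(random_effects_pool(effects, variances))
```

When the estimated REVC is zero, the second function returns exactly the fixed effect result. As the REVC grows relative to the within-study variances, the weights become more alike and the pooled value moves toward the un-weighted average, which is the behaviour described in the text.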

Overall, it appears that heterogeneity is being consistently underestimated in meta-analyses, and sensitivity analyses in which high heterogeneity levels are assumed could be informative.[50] These random effects models and software packages mentioned above relate to study-aggregate meta-analyses, and researchers wishing to conduct individual patient data (IPD) meta-analyses need to consider mixed-effects modelling approaches.

The IVhet model is implemented in MetaXL,[53] a free Microsoft Excel add-in for meta-analysis produced by Epigear International Pty Ltd and made available on 5 April 2014. The latter study also reports that the IVhet model resolves the problems related to underestimation of the statistical error, poor coverage of the confidence interval and increased MSE seen with the random effects model, and the authors conclude that researchers should henceforth abandon use of the random effects model in meta-analysis. The availability of free software (MetaXL)[53] that runs the IVhet model (and all other models for comparison) facilitates this for the research community.

The quality effects model[55][56] introduced a new approach to adjustment for inter-study variability by incorporating the contribution of variance due to a relevant component (quality) in addition to the contribution of variance due to random error that is used in any fixed effects meta-analysis model to generate weights for each study. The strength of the quality effects meta-analysis is that it allows available methodological evidence to be used over subjective random effects, and thereby helps to close the damaging gap which has opened up between methodology and statistics in clinical research.

Indirect comparison meta-analysis methods (also called network meta-analyses, in particular when multiple treatments are assessed simultaneously) generally use two main methodologies. These have been executed using Bayesian methods, mixed linear models and meta-regression approaches.

A Bayesian network meta-analysis model involves writing a directed acyclic graph (DAG) model for general-purpose Markov chain Monte Carlo (MCMC) software such as WinBUGS.

Great claims are sometimes made for the inherent ability of the Bayesian framework to handle network meta-analysis and its greater flexibility. On the other hand, the frequentist multivariate methods involve approximations and assumptions that are not stated explicitly or verified when the methods are applied (see discussion on meta-analysis models above). Senn advises analysts to be cautious about interpreting the 'random effects' analysis since only one random effect is allowed for but one could envisage many.[63] Senn goes on to say that it is rather naïve, even in the case where only two treatments are being compared, to assume that random-effects analysis accounts for all uncertainty about the way effects can vary from trial to trial. Newer models of meta-analysis such as those discussed above would certainly help alleviate this situation and have been implemented in the next framework, the generalized pairwise modelling framework.

An approach that has been tried since the late 1990s is the implementation of the multiple three-treatment closed-loop analysis. Very recently, automation of the three-treatment closed loop method has been developed for complex networks by some researchers[52] as a way to make this methodology available to the mainstream research community. It also utilizes robust meta-analysis methods so that many of the problems highlighted above are avoided. Further research around this framework is required to determine if this is indeed superior to the Bayesian or multivariate frequentist frameworks. Researchers willing to try this out have access to this framework through free software.

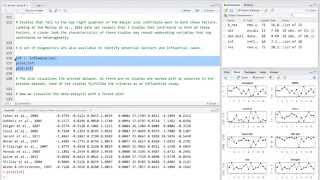

Statistical meta-analysis does more than just combine the effect sizes of a set of studies using a weighted average. It can test if the outcomes of studies show more variation than the variation that is expected because of the sampling of different numbers of research participants. Other uses of meta-analytic methods include the development of clinical prediction models, where meta-analysis may be used to combine data from different research centers,[66] or even to aggregate existing prediction models. The results of a meta-analysis are often shown in a forest plot. Results from studies are combined using different approaches. One approach frequently used in meta-analysis in health care research is termed the 'inverse variance method'.

Signed differential mapping is a statistical technique for meta-analyzing studies on differences in brain activity or structure which used neuroimaging techniques such as fMRI, VBM or PET. Different high throughput techniques such as microarrays have been used to understand gene expression. A meta-analysis of such expression profiles was performed to derive novel conclusions and to validate the known findings.

"Publication bias in psychological science: prevalence, methods for identifying and controlling, and implications for the use of meta-analyses".
"Fearing the future of empirical psychology: Bem's (2011) evidence of psi as a case study of deficiencies in modal research practice" (PDF).
"Combining heterogenous studies using the random-effects model is a mistake and leads to inconclusive meta-analyses" (PDF).

"Performance of statistical methods for meta-analysis when true study effects are non-normally distributed: a simulation study".
"Performance of statistical methods for meta-analysis when true study effects are non-normally distributed: a comparison between DerSimonian-Laird and restricted maximum likelihood".
"A framework for developing, implementing, and evaluating clinical prediction models in an individual participant data meta-analysis".

Explores two contrasting views: does meta-analysis provide "objective, quantitative methods for combining evidence from separate but similar studies" or merely "statistical tricks which make unjustified assumptions in producing oversimplified generalisations out of a complex of disparate studies"?
Gives technical background material and details on the "An historical perspective on meta-analysis" paper cited in the references.
The Essential Guide to Effect Sizes: An Introduction to Statistical Power, Meta-Analysis and the Interpretation of Research Results.
Cochrane Handbook for Systematic Reviews of Interventions.
Meta-Analysis at 25 (Gene V Glass).
Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement, "an evidence-based minimum set of items for reporting in systematic reviews and meta-analyses".