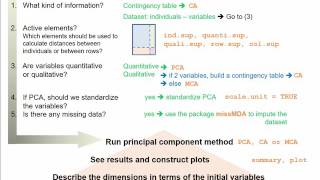

Statistical analysis methods

Most important methods for statistical data analysis

In the information age, data is no longer scarce; it is overpowering. To sort through all this information, you need the right statistical data analysis tools. With the current obsession over "big data," analysts have produced a lot of fancy tools and techniques available to large organizations. However, there are a handful of basic data analysis tools that most organizations aren't using. We suggest starting your data analysis efforts with the following five fundamentals, and learning to avoid their pitfalls, before advancing to more sophisticated techniques.

The arithmetic mean, more commonly known as "the average," is the sum of a list of numbers divided by the number of items on the list.

In a portfolio of data analysis methods, the standard deviation is useful for quickly determining the dispersion of data points. Like the mean, the standard deviation is deceptive if taken alone.

Using proportion and standard deviation methods, you can determine the sample size you need to make your data collection statistically significant. When studying a new, untested variable in a population, your proportion equations might need to rely on certain assumptions. If those assumptions are wrong, the error is passed along to your sample size determination and then on to the rest of your statistical data analysis.

In data analysis and statistics, you consider the result of a hypothesis test statistically significant if the results would be very unlikely to occur by random chance alone. Another common error is the Hawthorne effect (or observer effect), which happens when participants skew results because they know they are being studied.

Overall, these methods of data analysis add a lot of insight to your decision-making portfolio, particularly if you've never analyzed a process or data set with statistics before.
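The mean, standard deviation and sample size ideas above can be sketched in a few lines of Python. This is a minimal illustration, not the source's own method: the two data lists are made up, and the sample size formula n = z² · p(1 − p) / e² is an assumption (a common textbook choice for estimating a proportion), since the text does not say which equation it has in mind.

```python
import math
from statistics import NormalDist, mean, pstdev


def sample_size_for_proportion(p, margin, confidence=0.95):
    """Smallest n that estimates a proportion near p within +/- margin.

    Assumes the textbook formula n = z^2 * p * (1 - p) / margin^2.
    """
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided critical value
    return math.ceil(z * z * p * (1 - p) / (margin * margin))


# Hypothetical data: identical means, very different dispersion.
steady = [48, 49, 50, 51, 52]
erratic = [10, 30, 50, 70, 90]

print("means:", mean(steady), mean(erratic))
print("standard deviations:", pstdev(steady), pstdev(erratic))

# p = 0.5 is the most conservative assumption when nothing is known yet.
print("sample size:", sample_size_for_proportion(p=0.5, margin=0.05))
```

Both lists have the same mean, so the mean alone hides the difference that the standard deviation exposes. The sample size depends directly on the assumed proportion `p`, which is why a poor assumption carries through to everything computed afterwards.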
Once you master these fundamental techniques for statistical data analysis, you are ready to advance to more powerful data analysis tools.

Basic statistical tools in research and data analysis

Zulfiqar Ali and S Bala Bhaskar. Indian J Anaesth. 2016 October; 60(10). PMC5037948.

Department of Anaesthesiology, Division of Neuroanaesthesiology, Sheri Kashmir Institute of Medical Sciences, Soura, Srinagar, Jammu and Kashmir, India; Department of Anaesthesiology and Critical Care, Vijayanagar Institute of Medical Sciences, Bellary, Karnataka, India.

Abstract

Statistical methods involved in carrying out a study include planning, designing, collecting data, analysing, drawing meaningful interpretation and reporting of the research findings. The statistical analysis gives meaning to the meaningless numbers, thereby breathing life into lifeless data.

An idea of the sample size estimation, power analysis and the statistical errors is given. Finally, there is a summary of parametric and non-parametric tests used for data analysis.

Keywords: basic statistical tools, degree of dispersion, measures of central tendency, parametric tests and non-parametric tests, variables, variance

Introduction

Statistics is a branch of science that deals with the collection, organisation, analysis of data and drawing of inferences from the samples to the whole population.[1] This requires a proper design of the study, an appropriate selection of the study sample and choice of a suitable statistical test.[13]

The two most basic prerequisites for parametric statistical analysis are:

- The assumption of normality, which specifies that the means of the sample group are normally distributed
- The assumption of equal variance, which specifies that the variances of the samples and of their corresponding population are equal

However, if the distribution of the sample is skewed towards one side or the distribution is unknown due to the small sample size, non-parametric[14] statistical techniques are used. The commonly used parametric tests are the Student's t-test, analysis of variance (ANOVA) and repeated measures ANOVA.

Student's t-test

Student's t-test is used to test the null hypothesis that there is no difference between the means of the two groups.

Analysis of variance

A simplified formula for the F statistic is:

$$F = \frac{MS_b}{MS_w}$$

where $MS_b$ is the mean squares between the groups and $MS_w$ is the mean squares within the groups.

Repeated measures analysis of variance

As with ANOVA, repeated measures ANOVA analyses the equality of means of three or more groups.

The types of non-parametric analysis techniques and the corresponding parametric analysis techniques are delineated in Table 5 (Analogue of parametric and non-parametric tests).

Median test for one sample: the sign test and Wilcoxon's signed rank test

The sign test and Wilcoxon's signed rank test are used for median tests of one sample.
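The F statistic described in this section (mean squares between groups divided by mean squares within groups) can be computed by hand. The sketch below assumes the usual one-way ANOVA definitions, with k − 1 degrees of freedom between groups and N − k within; the function name and the three small groups are hypothetical.

```python
from statistics import mean


def one_way_f(groups):
    """Return F = MSb / MSw for a list of groups of observations."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total

    # Between-group sum of squares: group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within


# Hypothetical measurements for three groups.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
print(one_way_f(groups))
```

A large F means the group means differ by more than the spread inside each group would suggest. Turning F into a p-value requires the F distribution, which this sketch leaves out.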
Wilcoxon's signed rank test not only examines the observed values in comparison with θ0 but also takes into consideration the relative sizes, adding more statistical power to the test. The data values are ranked in an increasing order, and the rank sums calculated, followed by calculation of the test statistic.

Jonckheere test

In contrast to the Kruskal–Wallis test, in the Jonckheere test there is an a priori ordering that gives it more statistical power than the Kruskal–Wallis test. If the outcome variable is dichotomous, then logistic regression is used.

Software available for statistics, sample size calculation and power analysis

Numerous statistical software systems are available currently. The commonly used software systems are Statistical Package for the Social Sciences (SPSS, manufactured by IBM Corporation), Statistical Analysis System (SAS, developed by SAS Institute, North Carolina, United States of America), R (designed by Ross Ihaka and Robert Gentleman from R core team), Minitab (developed by Minitab Inc), Stata (developed by StataCorp) and MS Excel (developed by Microsoft).

Summary

It is important that a researcher knows the concepts of the basic statistical methods used for conduct of a research study.

Inappropriate use of statistical techniques may lead to faulty conclusions, inducing errors and undermining the significance of the article. Hence, an adequate knowledge of statistics and the appropriate use of statistical tests are important. An appropriate knowledge about the basic statistical methods will go a long way in improving the research designs and producing quality medical research which can be utilised for formulating the evidence-based guidelines.

Conflicts of interest

There are no conflicts of interest.

Lesson 12: To determine what statistical methods to use for specific situations

This lesson is a culmination of STAT 500. A review of all the statistical techniques is provided, as well as a table consisting of inferences, parameters, statistics, types of data, examples, analysis, and Minitab commands.

Upon successful completion of this lesson, you will be able to:

- Review the statistical techniques we have learned.
- Recognize what statistical technique to use for specific situations.
- Apply what we learned in the course to real-world situations.

To get started, you can use the chart below to help you determine the correct statistical technique to use for the research scenario you are involved in.
The scales include standard deviations, cumulative percentages, percentile equivalents, z-scores, t-scores, standard nines, and percentages in standard nines.

Scatter plots are used in descriptive statistics to show the observed relationships between different variables.

Statistics is a branch of mathematics dealing with the collection, analysis, interpretation, presentation, and organization of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model process to be studied. In contrast, an observational study does not involve experimental manipulation.

Two main statistical methods are used in data analysis: descriptive statistics, which summarize data from a sample using indexes such as the mean or standard deviation, and inferential statistics, which draw conclusions from data that are subject to random variation. Inferences on mathematical statistics are made under the framework of probability theory, which deals with the analysis of random phenomena.

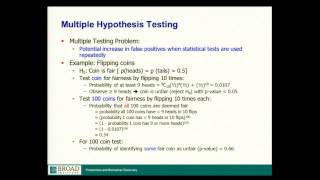

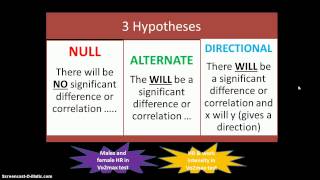

Standard statistical procedure involves the test of the relationship between two statistical data sets, or a data set and synthetic data drawn from an idealized model. A hypothesis is proposed for the statistical relationship between the two data sets, and this is compared as an alternative to an idealized null hypothesis of no relationship between two data sets. Rejecting or disproving the null hypothesis is done using statistical tests that quantify the sense in which the null can be proven false, given the data that are used in the test.

Specialized definitions are:

- Merriam-Webster dictionary defines statistics as "a branch of mathematics dealing with the collection, analysis, interpretation, and presentation of masses of numerical data."
- Statistics is also described as a mathematical body of science that pertains to the collection, analysis, interpretation or explanation, and presentation of data,[7] or as a branch of mathematics.

Mathematical techniques used for this include mathematical analysis, linear algebra, stochastic analysis, differential equations, and measure-theoretic probability theory. These inferences may take the form of: answering yes/no questions about the data (hypothesis testing), estimating numerical characteristics of the data (estimation), describing associations within the data (correlation) and modeling relationships within the data (for example, using regression analysis). Statistics itself also provides tools for prediction and forecasting through statistical models.

To use a sample as a guide to an entire population, it is important that it truly represents the overall population. Statistics offers methods to estimate and correct for any bias within the sample and data collection procedures. There are also methods of experimental design for experiments that can lessen these issues at the outset of a study, strengthening its capability to discern truths about the population.

Sampling theory is part of the mathematical discipline of probability theory.
Probability is used in mathematical statistics to study the sampling distributions of sample statistics and, more generally, the properties of statistical procedures. The use of any statistical method is valid when the system or population under consideration satisfies the assumptions of the method.

Statistical inference, however, moves in the opposite direction, inductively inferring from samples to the parameters of a larger or total population.

Experimental and observational studies

A common goal for a statistical research project is to investigate causality, and in particular to draw a conclusion on the effect of changes in the values of predictors or independent variables on dependent variables. While the tools of data analysis work best on data from randomized studies, they are also applied to other kinds of data, like natural experiments and observational studies,[13] for which a statistician would use a modified, more structured estimation method (e.g., difference in differences estimation and instrumental variables, among many others) that produces consistent estimators.

The basic steps of a statistical experiment are:

1. Planning the research, including finding the number of replicates of the study, using the following information: preliminary estimates regarding the size of treatment effects, alternative hypotheses, and the estimated experimental variability. At this stage, the experimenters and statisticians write the experimental protocol that will guide the performance of the experiment and which specifies the primary analysis of the experimental data.
2. Performing the experiment following the experimental protocol and analyzing the data following the experimental protocol.
3. Further examining the data set in secondary analyses, to suggest new hypotheses for future study.
4. Documenting and presenting the results of the study.

Experiments on human behavior have special concerns. An observational study typically uses a survey to collect observations about the area of interest and then performs statistical analysis.

Various attempts have been made to produce a taxonomy of levels of measurement.
However, the mapping of computer science data types to statistical data types depends on which categorization of the latter is being used. Other categorizations have been proposed. The issue of whether or not it is appropriate to apply different kinds of statistical methods to data obtained from different kinds of measurement procedures is complicated by issues concerning the transformation of variables and the precise interpretation of research questions. The relationship between the data and what they describe merely reflects the fact that certain kinds of statistical statements may have truth values which are not invariant under some transformations.

Consider independent identically distributed (IID) random variables with a given probability distribution: standard statistical inference and estimation theory defines a random sample as the random vector given by the column vector of these IID variables. Furthermore, an estimator is said to be unbiased if its expected value is equal to the true value of the unknown parameter being estimated, and asymptotically unbiased if its expected value converges at the limit to the true value of such parameter.

Other desirable properties for estimators include: UMVUE estimators, which have the lowest variance for all possible values of the parameter to be estimated (this is usually an easier property to verify than efficiency), and consistent estimators, which converge in probability to the true value of such parameter.

This still leaves the question of how to obtain estimators in a given situation and carry out the computation. Several methods have been proposed: the method of moments, the maximum likelihood method, the least squares method and the more recent method of estimating equations.
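The definition of an unbiased estimator can be checked exactly on a toy case. The sketch below is an illustration under stated assumptions: the four-value population and the sample size of two are made up, and samples are drawn with replacement so that the draws are IID. It enumerates every possible sample and averages two variance estimators over all of them.

```python
from itertools import product
from statistics import mean

# Hypothetical tiny population; each value is equally likely on every draw.
population = [1, 2, 3, 4]
mu = mean(population)
true_variance = mean([(x - mu) ** 2 for x in population])


def variance_estimate(sample, ddof):
    """Sum of squared deviations from the sample mean, divided by n - ddof."""
    m = sum(sample) / len(sample)
    return sum((x - m) ** 2 for x in sample) / (len(sample) - ddof)


n = 2
# All equally likely IID samples of size n (drawn with replacement).
samples = list(product(population, repeat=n))

expected_divide_by_n = mean([variance_estimate(s, 0) for s in samples])
expected_divide_by_n_minus_1 = mean([variance_estimate(s, 1) for s in samples])

print("true variance:", true_variance)
print("expected value, divide by n:", expected_divide_by_n)
print("expected value, divide by n - 1:", expected_divide_by_n_minus_1)
```

Dividing by n − 1 gives an expected value equal to the true variance, so that estimator is unbiased. Dividing by n underestimates by the factor (n − 1)/n, a gap that shrinks as n grows, which is what asymptotically unbiased means.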

Null hypothesis and alternative hypothesis

Interpretation of statistical information can often involve the development of a null hypothesis, which is usually (but not necessarily) that no relationship exists among variables or that no change occurred over time.

A statistical error is the amount by which an observation differs from its expected value; a residual is the amount by which an observation differs from the value that the estimator of the expected value assumes on a given sample (also called prediction).

Least squares fit: in red the points to be fitted, in blue the fitted line.

Many statistical methods seek to minimize the residual sum of squares, and these are called "methods of least squares" in contrast to least absolute deviations. Both linear regression and non-linear regression are addressed in polynomial least squares, which also describes the variance in a prediction of the dependent variable (y axis) as a function of the independent variable (x axis) and the deviations (errors, noise, disturbances) from the estimated (fitted) curve. Measurement processes that generate statistical data are also subject to error.

Formally, a 95% confidence interval for a value is a range where, if the sampling and analysis were repeated under the same conditions (yielding a different dataset), the interval would include the true (population) value in 95% of all possible cases. Sometimes the bounds for a confidence interval are reached asymptotically and these are used to approximate the true bounds.

Statistics rarely give a simple yes/no type answer to the question under analysis. Interpretation often comes down to the level of statistical significance applied to the numbers, usually expressed through the p-value: the probability, computed under the assumption that the null hypothesis is true, of obtaining a result at least as extreme as the one observed.
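The least squares idea mentioned above (choose the fit that minimizes the residual sum of squares) can be written out for a straight line. This is a minimal sketch using the standard closed-form solution for simple linear regression; the function names and the four data points are hypothetical.

```python
def least_squares_line(xs, ys):
    """Return (slope, intercept) minimizing the residual sum of squares."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def residual_sum_of_squares(xs, ys, slope, intercept):
    """Sum of squared differences between observed and fitted values."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical points to be fitted.
xs = [0, 1, 2, 3]
ys = [1, 3, 4, 8]

slope, intercept = least_squares_line(xs, ys)
rss = residual_sum_of_squares(xs, ys, slope, intercept)
print("slope:", slope, "intercept:", intercept, "RSS:", rss)

# Any other line does worse on the same criterion.
print(residual_sum_of_squares(xs, ys, slope + 0.1, intercept) > rss)
```

The residuals here are the differences between each observed y and the fitted value, matching the definition of a residual given in the text. A least absolute deviations fit would minimize the sum of absolute residuals instead and generally gives a different line.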
The probability of type I error is therefore the probability that the estimator belongs to the critical region given that the null hypothesis is true (statistical significance), and the probability of type II error is the probability that the estimator doesn't belong to the critical region given that the alternative hypothesis is true. The statistical power of a test is the probability that it correctly rejects the null hypothesis when the null hypothesis is false.

Referring to statistical significance does not necessarily mean that the overall result is significant in real world terms. For example, in a large study of a drug it may be shown that the drug has a statistically significant but very small beneficial effect, such that the drug is unlikely to help the patient noticeably.

Although in principle the acceptable level of statistical significance may be subject to debate, the p-value is the smallest significance level that allows the test to reject the null hypothesis. Rejecting only when the p-value is small therefore keeps the probability of committing a type I error low.

Some problems are usually associated with this framework (see criticism of hypothesis testing): a difference that is highly statistically significant can still be of no practical significance, but it is possible to properly formulate tests to account for this.

For instance, social policy, medical practice, and the reliability of structures like bridges all rely on the proper use of statistics. Even when statistical techniques are correctly applied, the results can be difficult to interpret for those lacking expertise.
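The definitions of type I error, type II error, power and p-value can be made concrete with an exact calculation. The setup below is entirely hypothetical: a coin is flipped 20 times, the null hypothesis is a fair coin, the alternative is a heads probability of 0.7, and the critical region is "15 or more heads" in a one-sided test.

```python
from math import comb


def binom_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def upper_tail(k_min, n, p):
    """P(X >= k_min) for X ~ Binomial(n, p)."""
    return sum(binom_pmf(k, n, p) for k in range(k_min, n + 1))


n = 20                # number of flips (hypothetical)
critical_value = 15   # reject the null when heads >= 15 (hypothetical)

# Type I error: landing in the critical region although the null is true.
alpha = upper_tail(critical_value, n, 0.5)

# Power: landing in the critical region when the alternative (p = 0.7) is true.
power = upper_tail(critical_value, n, 0.7)

# Type II error: missing the critical region although the alternative is true.
beta = 1 - power

# p-value for an observed 16 heads: a result at least this extreme under the null.
p_value = upper_tail(16, n, 0.5)

print("alpha:", alpha)
print("power:", power, "beta:", beta)
print("p-value for 16 heads:", p_value)
```

Because 16 heads falls inside the critical region, its p-value is below alpha and the test rejects the null. Moving the critical value higher lowers alpha and lowers power at the same time, which is the trade-off between the two error types.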

The statistical significance of a trend in the data, which measures the extent to which a trend could be caused by random variation in the sample, may or may not agree with an intuitive sense of its significance. The set of basic statistical skills (and skepticism) that people need to deal with information in their everyday lives properly is referred to as statistical literacy.

There is a general perception that statistical knowledge is all-too-frequently intentionally misused by finding ways to interpret only the data that are favorable to the presenter. In an attempt to shed light on the use and misuse of statistics, reviews of statistical techniques used in particular fields are conducted. Statistical analysis of a data set often reveals that two variables (properties) of the population under consideration tend to vary together, as if they were connected.

Cardano, the earliest pioneer on the mathematics of probability.

Statistical methods date back at least to the 5th century BC.[33] Early applications of statistical thinking revolved around the needs of states to base policy on demographic and economic data, hence its stat- etymology. The scope of the discipline of statistics broadened in the early 19th century to include the collection and analysis of data in general.[35] The first wave, at the turn of the century, was led by the work of Francis Galton and Karl Pearson, who transformed statistics into a rigorous mathematical discipline used for analysis, not just in science, but in industry and politics as well. Galton's contributions included introducing the concepts of standard deviation, correlation, regression analysis and the application of these methods to the study of the variety of human characteristics (height, weight and eyelash length, among others).
Fisher's most important publications were his 1918 seminal paper The Correlation between Relatives on the Supposition of Mendelian Inheritance, which was the first to use the statistical term variance, his classic 1925 work Statistical Methods for Research Workers and his 1935 The Design of Experiments,[42][43][44][45] where he developed rigorous design of experiments models. Statistical methods are applied in all fields that involve decision making, for making accurate inferences from a collated body of data and for making decisions in the face of uncertainty based on statistical methodology. The use of modern computers has expedited large-scale statistical computations, and has also made possible new methods that are impractical to perform manually.

Theoretical statistics concerns both the logical arguments underlying justification of approaches to statistical inference and mathematical statistics. Mathematical statistics includes not only the manipulation of probability distributions necessary for deriving results related to methods of estimation and inference, but also various aspects of computational statistics and the design of experiments.

Statistical consultants can help organizations and companies that don't have in-house expertise relevant to their particular questions.

Statistical computing

The rapid and sustained increases in computing power starting from the second half of the 20th century have had a substantial impact on the practice of statistical science. Early statistical models were almost always from the class of linear models, but powerful computers, coupled with suitable numerical algorithms, caused an increased interest in nonlinear models (such as neural networks) as well as the creation of new types, such as generalized linear models and multilevel models.

Increased computing power has also led to the growing popularity of computationally intensive methods based on resampling, such as permutation tests and the bootstrap, while techniques such as Gibbs sampling have made use of Bayesian models more feasible. A large number of both general and special purpose statistical software packages are now available.

Statistics applied to mathematics or the arts

Initially derided by some mathematical purists, it is now considered essential methodology in certain areas. In number theory, scatter plots of data generated by a distribution function may be transformed with familiar tools used in statistics to reveal underlying patterns, which may then lead to hypotheses. Methods of statistics including predictive methods in forecasting are combined with chaos theory and fractal geometry to create video works that are considered to have great beauty.
With the advent of computers, statistical methods were applied to formalize such distribution-driven natural processes to make and analyze moving video art. Methods of statistics may be used predictively in performance art, as in a card trick based on a Markov process that only works some of the time, the occasion of which can be predicted using statistical methodology. Statistics can be used to predictively create art, as in the statistical or stochastic music invented by Iannis Xenakis, where the music is performance-specific.

In addition, there are particular types of statistical analysis that have also developed their own specialised terminology and methodology, including bootstrap / jackknife resampling, multivariate statistics and structured data analysis.

Statistics is also used to understand measurement systems variability, control processes (as in statistical process control or SPC), summarize data, and make data-driven decisions. In these roles, it is a key tool, and perhaps the only reliable tool.
